The case of Netflix

- Sparse-aware

- IO agnostic

- Single-machine

Vadim Markovtsev - Codemotion Madrid, 2017

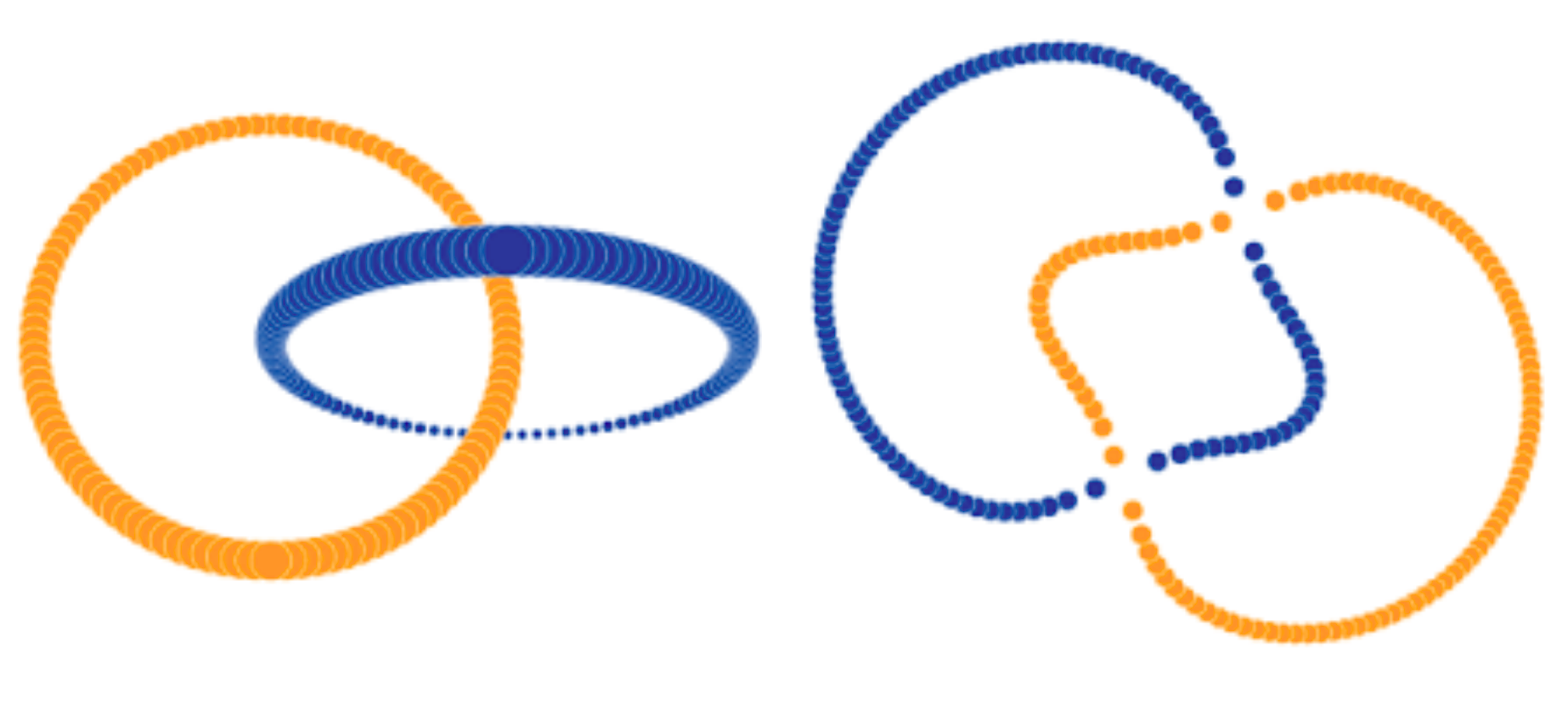

Approach combines the advantages of the two major model families:

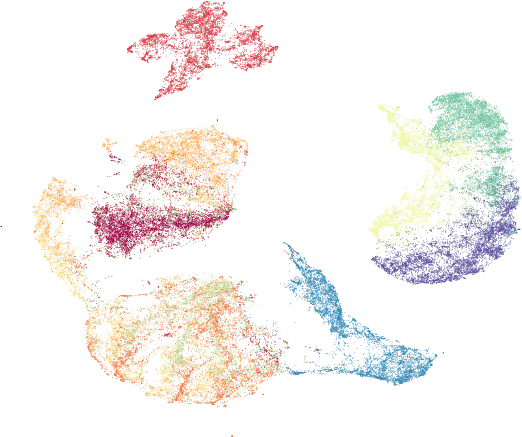

Example:

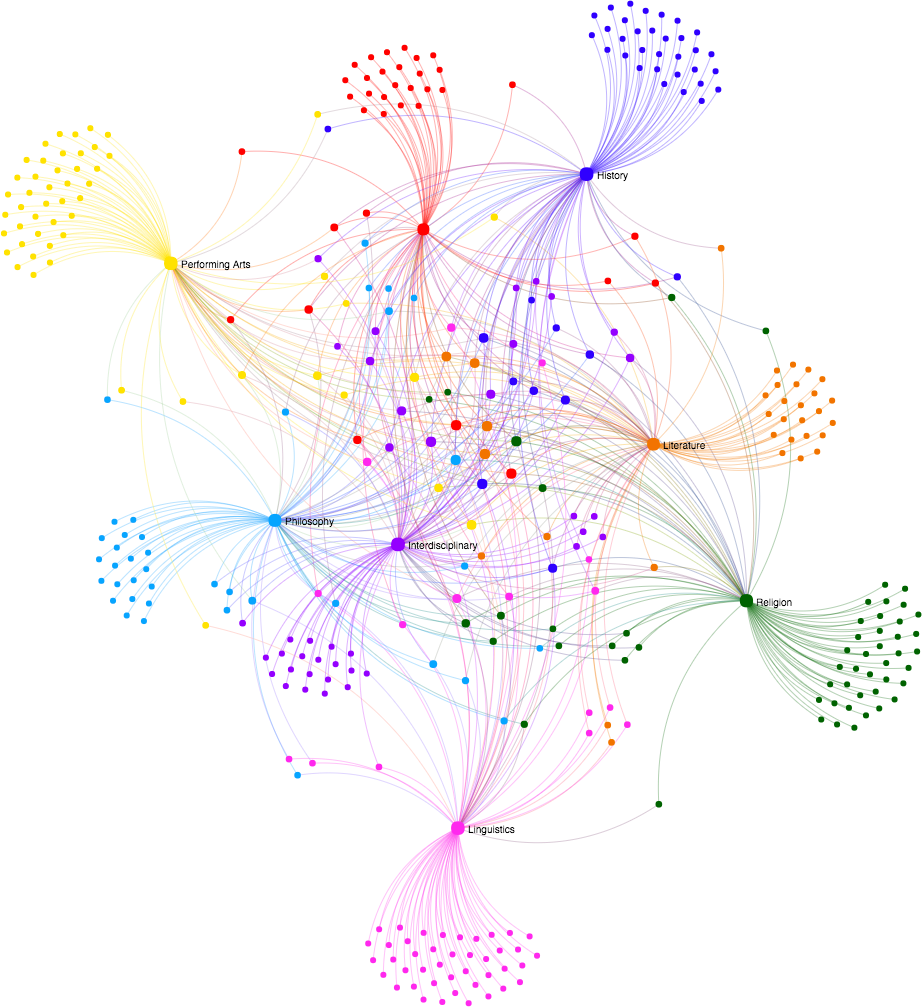

Embedding engine which works on co-occurrence matrices.

Like GloVe, but better - Paper on arXiv.

We forked the TensorFlow implementation.
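
To make "works on co-occurrence matrices" concrete, here is a minimal sketch of how such a matrix could be counted from sequences of items. It is an illustration only, not the engine's actual preprocessing: the function name `cooccurrence_counts`, the `window` parameter, and the toy sequences are all assumptions made for this example.

```python
from collections import Counter


def cooccurrence_counts(sequences, window=2):
    """Count symmetric co-occurrences of items at most `window` positions apart.

    Hypothetical helper for illustration; the result is a sparse mapping
    {(row_item, column_item): count}, so pairs that never co-occur take no space.
    """
    counts = Counter()
    for seq in sequences:
        for i, center in enumerate(seq):
            # Look only to the right and record the pair in both directions,
            # which keeps the resulting matrix symmetric.
            for j in range(i + 1, min(i + 1 + window, len(seq))):
                context = seq[j]
                counts[(center, context)] += 1
                counts[(context, center)] += 1
    return counts


# Toy input: each inner list is one "document" (or one user's history).
sequences = [["a", "b", "c"], ["a", "c"]]
narrow = cooccurrence_counts(sequences, window=1)
wide = cooccurrence_counts(sequences, window=2)
```

With `window=1` only adjacent items count as co-occurring; widening the window adds more distant pairs. An embedding engine of this kind would then factorize the resulting matrix rather than scan the raw sequences.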

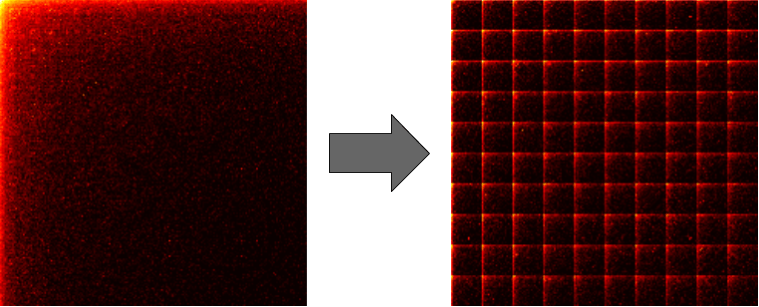

Problems

Properties:

Approach:

Task definition:

Why was it introduced?