The case of Netflix

- Sparse-aware

- IO agnostic

- Single-machine

Vadim Markovtsev, Egor Bulychev - M3 London, 2017


source{d}




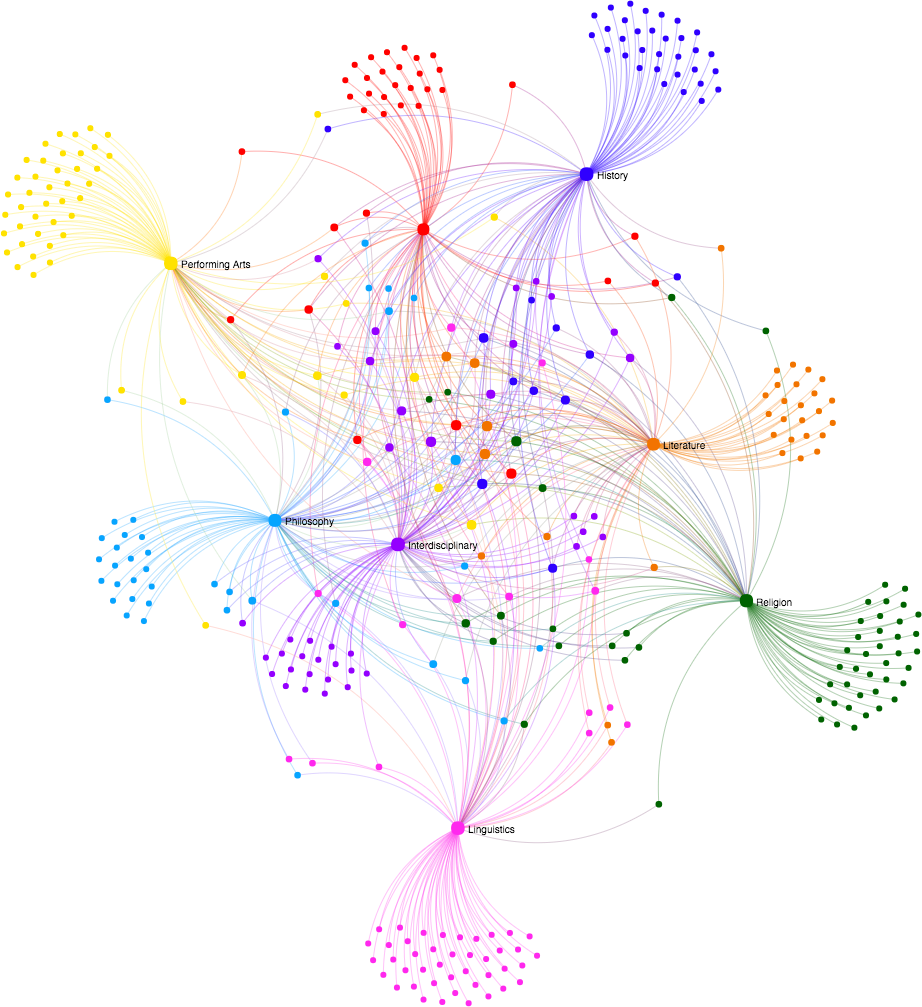

Approach combines the advantages of the two major model families:

Example:

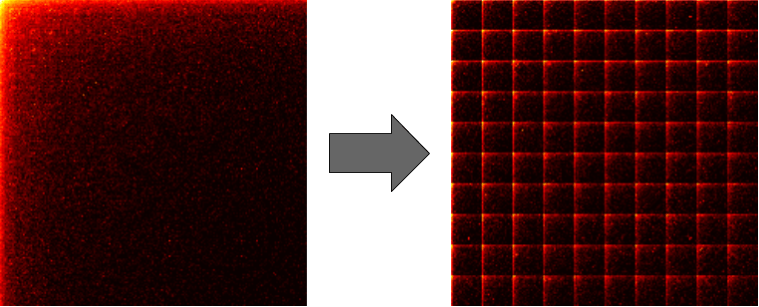

Embedding engine which works on co-occurrence matrices.

Like GloVe, but better - paper on arXiv.

We forked the TensorFlow implementation.
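For readers unfamiliar with the input format, here is a minimal sketch of what a co-occurrence matrix can look like. It is not the engine's own preprocessing: the function name `cooccurrence_counts`, the `window` parameter and the toy token sequences are all assumptions made for illustration. Pairs are stored sparsely, so pairs that never co-occur take no memory.

```python
from collections import Counter


def cooccurrence_counts(sequences, window=2):
    """Count symmetric token co-occurrences within a sliding window.

    Hypothetical helper for illustration only. Returns a sparse mapping
    {(token_a, token_b): count}; pairs that never co-occur are absent.
    """
    counts = Counter()
    for seq in sequences:
        for i, center in enumerate(seq):
            # Scan only the right-hand context and record both directions,
            # which keeps the resulting matrix symmetric.
            for j in range(i + 1, min(i + 1 + window, len(seq))):
                context = seq[j]
                counts[(center, context)] += 1
                counts[(context, center)] += 1
    return counts


# Toy corpus (made up): each inner list is one token sequence.
corpus = [
    ["import", "numpy", "as", "np"],
    ["import", "pandas", "as", "pd"],
]
matrix = cooccurrence_counts(corpus, window=2)
```

An embedding engine of this kind would then learn one vector per token so that vector similarity reflects these counts. A real corpus would typically need a proper sparse matrix format instead of a Python dictionary.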

Problems

Properties:

Approach:

Domains:

Why not collaborative filtering?

Task definition:

Why was it introduced?

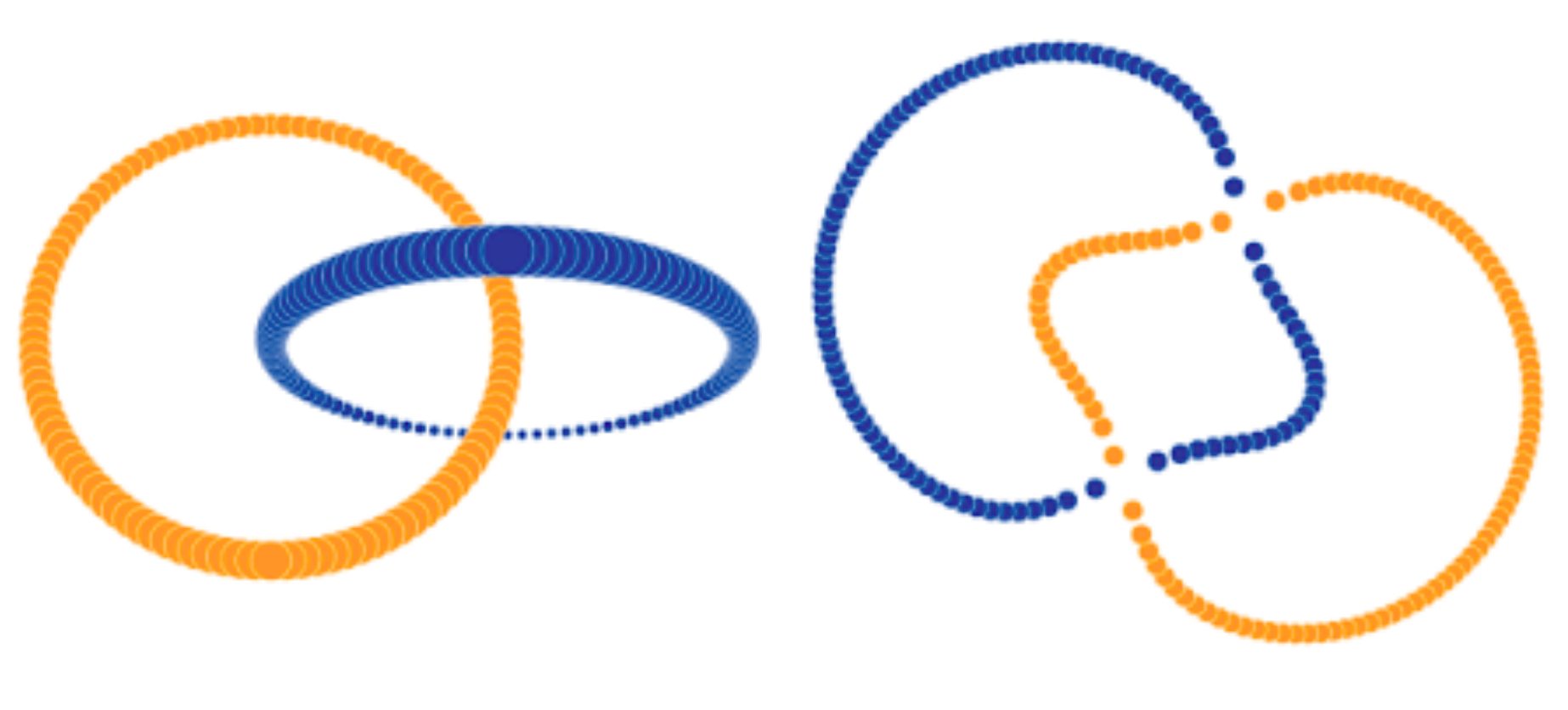

Large scale t-SNE replacement.