Source code abstracts classification using CNN

Vadim Markovtsev, source{d}

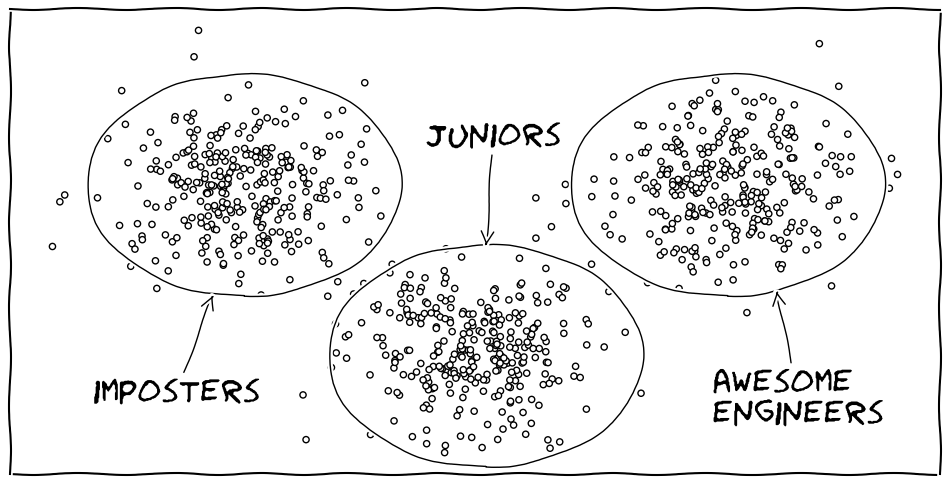

Everything is better with clusters.

Customers buy goods, and software developers write code.

So to understand the latter, we need to understand what they do and how they do it. Feature origins:

Let's check how deep we can drill with source code style ML.

Toy task: binary classification between 2 projects using only the data with the origin in code style.

Requirements:

(1) and (2) are solved by github/linguist and source{d}'s own tool

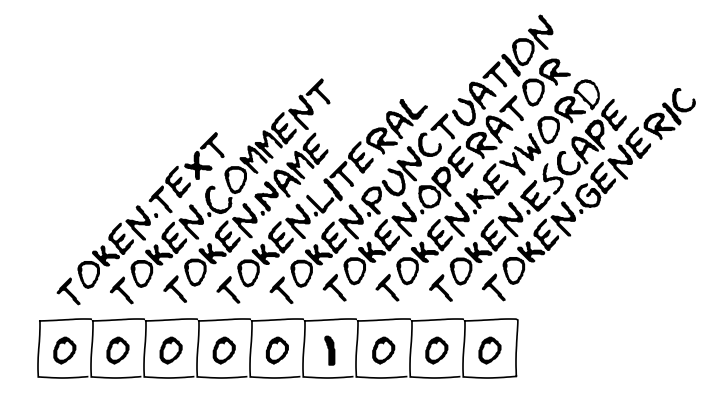

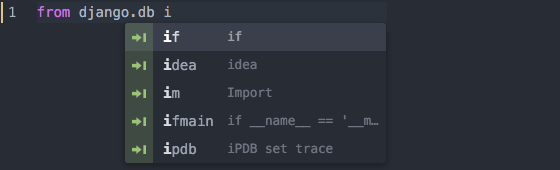

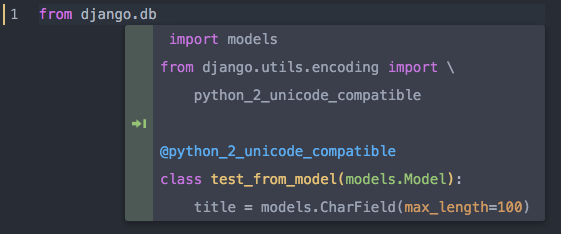

Pygments example:

    # prints "Hello, World!"
    if True:
        print("Hello, World!")

Resulting tokens:

    Token.Comment.Single '# prints "Hello, World!"'
    Token.Text '\n'
    Token.Keyword 'if'
    Token.Text ' '
    Token.Name.Builtin.Pseudo 'True'
    Token.Punctuation ':'
    Token.Text '\n'
    Token.Text '    '
    Token.Keyword 'print'
    Token.Punctuation '('
    Token.Literal.String.Double '"'
    Token.Literal.String.Double 'Hello, World!'
    Token.Literal.String.Double '"'
    Token.Punctuation ')'
    Token.Text '\n'
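A comparable stream of (type, text) pairs can be produced with Python's standard `tokenize` module. This is a stand-in, not Pygments itself: its type names (`COMMENT`, `NAME`, `OP`, ...) are coarser than Pygments' `Token.*` hierarchy, and the helper name `token_stream` is hypothetical.

```python
import io
import tokenize

SOURCE = '# prints "Hello, World!"\nif True:\n    print("Hello, World!")\n'


def token_stream(source):
    """Return (type name, text) pairs for a Python source string."""
    readline = io.StringIO(source).readline
    return [
        (tokenize.tok_name[tok.type], tok.string)
        for tok in tokenize.generate_tokens(readline)
    ]


if __name__ == "__main__":
    for type_name, text in token_stream(SOURCE):
        print(f"{type_name:<10} {text!r}")
```

Unlike the Pygments output, `tokenize` only handles Python and keeps the whole string literal as a single token.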

Though extracted, names as words may not be used in this scheme.
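One way to read this: only the token types feed the model, and the token texts (identifier names included) are dropped. The sketch below is an assumption, not the encoding used in the talk. It maps type names to integer ids and then to one-hot rows; `build_vocabulary` and `one_hot` are hypothetical helpers.

```python
def build_vocabulary(type_names):
    """Assign each distinct token type an integer id in first-seen order."""
    vocab = {}
    for name in type_names:
        vocab.setdefault(name, len(vocab))
    return vocab


def one_hot(type_names, vocab):
    """Encode a sequence of token types as one-hot rows; token text is ignored."""
    rows = []
    for name in type_names:
        row = [0] * len(vocab)
        row[vocab[name]] = 1
        rows.append(row)
    return rows


# Token types from the start of the Pygments example above, texts discarded.
types = ["Comment.Single", "Text", "Keyword", "Text", "Name.Builtin.Pseudo", "Punctuation"]
vocab = build_vocabulary(types)
matrix = one_hot(types, vocab)
```

Each row of `matrix` corresponds to one token, so a file becomes a 2-D array that a convolutional network can consume.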

We've checked out two approaches to using this extra information:

| layer | kernel | pooling | number |
|---|---|---|---|
| convolutional | 4x1 | 2x1 | 250 |
| convolutional | 8x2 | 2x2 | 200 |
| convolutional | 5x6 | 2x2 | 150 |
| convolutional | 2x10 | 2x2 | 100 |
| all2all | 512 | | |
| all2all | 64 | | |
| all2all | output | | |
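To see how the convolutional stack in the table shrinks its input, the sketch below traces shapes layer by layer. Several things are assumptions, since the slides do not state them: kernel and pooling sizes are read as rows x cols, convolutions are unpadded with stride 1, pooling is non-overlapping, and the 128x64 input is purely hypothetical.

```python
# Kernel and pooling sizes copied from the table, read as (rows, cols).
CONV_LAYERS = [
    {"kernel": (4, 1), "pool": (2, 1), "filters": 250},
    {"kernel": (8, 2), "pool": (2, 2), "filters": 200},
    {"kernel": (5, 6), "pool": (2, 2), "filters": 150},
    {"kernel": (2, 10), "pool": (2, 2), "filters": 100},
]


def conv_pool_shape(shape, kernel, pool):
    """Shape after an unpadded stride-1 convolution and non-overlapping pooling."""
    rows = (shape[0] - kernel[0] + 1) // pool[0]
    cols = (shape[1] - kernel[1] + 1) // pool[1]
    if rows < 1 or cols < 1:
        raise ValueError(f"input {shape} is too small for kernel {kernel}")
    return rows, cols


def trace_shapes(input_shape):
    """Return the (rows, cols, channels) shape after each convolutional block."""
    shapes = []
    shape = input_shape
    for layer in CONV_LAYERS:
        shape = conv_pool_shape(shape, layer["kernel"], layer["pool"])
        shapes.append((*shape, layer["filters"]))
    return shapes


# Hypothetical 128x64 input; the real input size is not given in the slides.
shapes = trace_shapes((128, 64))
flattened = shapes[-1][0] * shapes[-1][1] * shapes[-1][2]
```

Under these assumptions `flattened` is the number of values fed into the first all2all (fully connected) layer of 512 units.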

| hyperparameter | value |
|---|---|
| Activation | ReLU |
| Optimizer | GD with momentum (0.5) |
| Learning rate | 0.002 |
| Weight decay | 0.955 |
| Regularization | L2, 0.0005 |
| Weight initialization | σ = 0.1 |
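A minimal sketch of how some of these settings fit together, assuming classical momentum, an L2 penalty added to the gradient, and zero-mean Gaussian initialization. The "weight decay 0.955" entry is left out because the slides do not say what it applies to. The function names and the toy quadratic objective are hypothetical.

```python
import random


def init_weights(n, sigma=0.1, seed=0):
    """Draw n weights from a zero-mean Gaussian with standard deviation sigma."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, sigma) for _ in range(n)]


def momentum_step(weights, velocity, grads, lr=0.002, momentum=0.5, l2=0.0005):
    """One classical-momentum update; the L2 term adds l2 * w to each gradient."""
    new_velocity = [
        momentum * v - lr * (g + l2 * w)
        for w, v, g in zip(weights, velocity, grads)
    ]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


# Toy objective: 0.5 * (w - 3)^2 per weight, so the gradient is (w - 3).
weights = init_weights(2)
velocity = [0.0] * len(weights)
for _ in range(5000):
    grads = [w - 3.0 for w in weights]
    weights, velocity = momentum_step(weights, velocity, grads)
```

With the L2 term included, the weights settle slightly below 3, at 3 / 1.0005, which shows the small pull toward zero that the regularizer adds.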

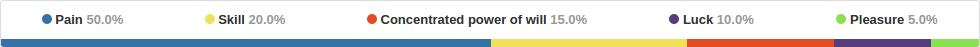

| projects | description | size | accuracy |
|---|---|---|---|
| Django vs Twisted | Web frameworks, Python | 800ktok each | 84% |
| Matplotlib vs Bokeh | Plotting libraries, Python | 1Mtok vs 250ktok | 60% |
| Matplotlib vs Django | Plotting library vs web framework, Python | 1Mtok vs 800ktok | 76% |
| Django vs Guava | Python vs Java | 800ktok | >99% |
| Hibernate vs Guava | Java libraries | 3Mtok vs 800ktok | 96% |

Conclusion: the network is likely to extract internal similarity in each project and use it. Just like humans do.

If the languages are different, it is very easy to distinguish projects (at least because of unique token types).

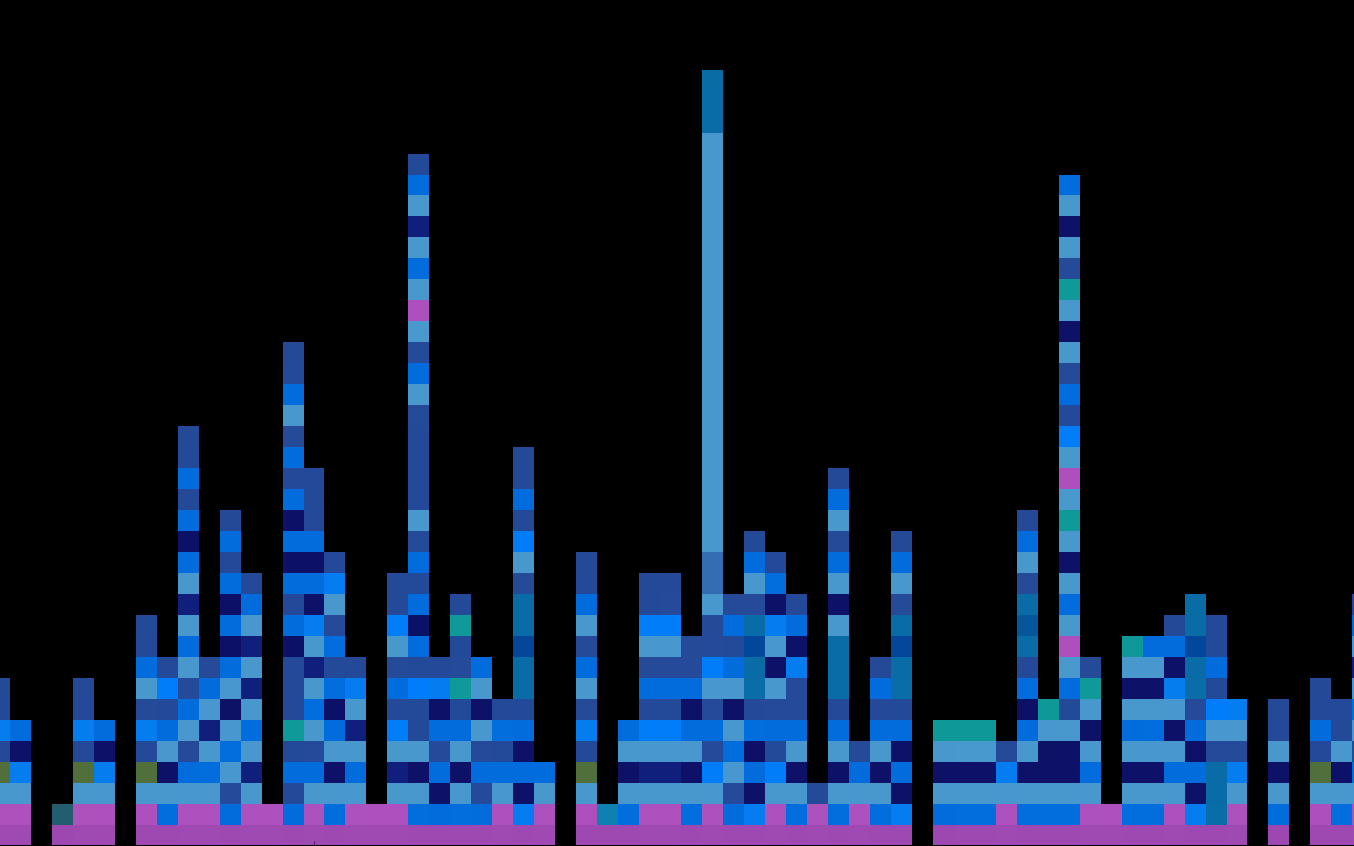

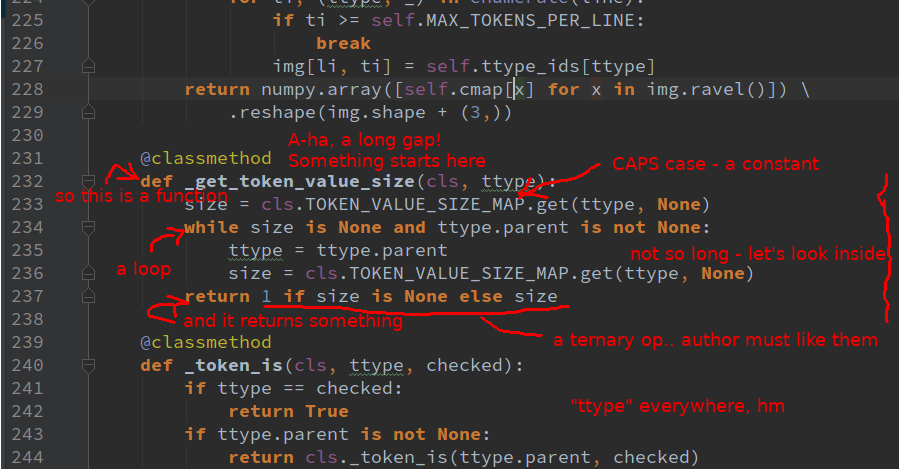

Problem: how to get this for a source code network?

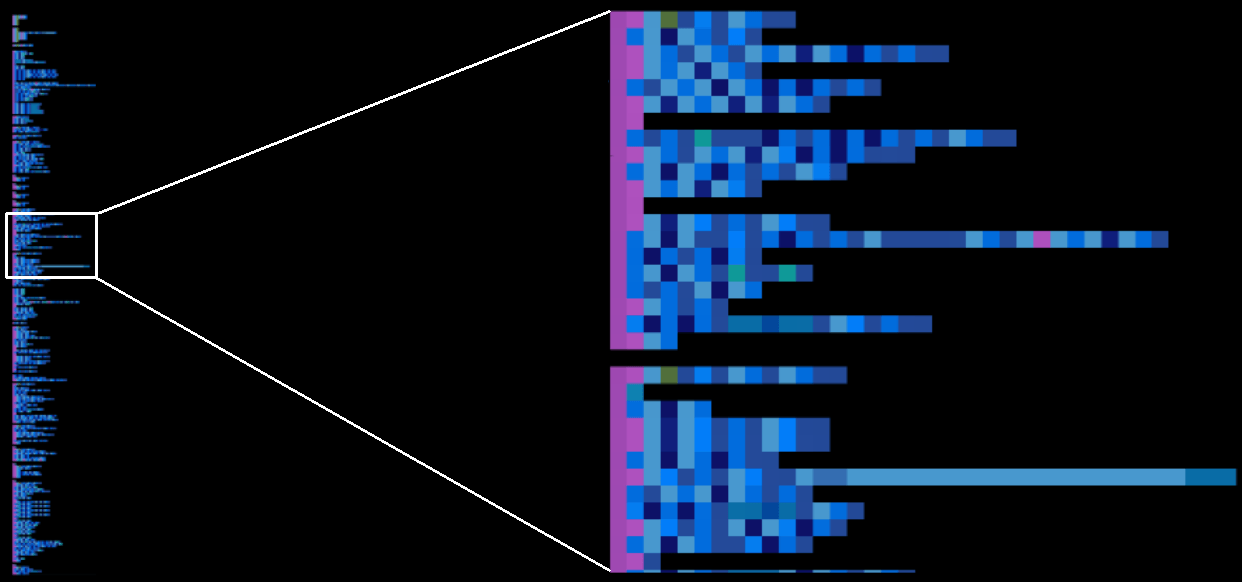

GitHub has ≈6M active users (≈3M after reasonable filtering). If we can extract various features for each of them, we can cluster them. Vision:

BTW, kmcuda implements Yinyang k-means.
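For orientation, here is plain Lloyd's k-means in pure Python. It is neither kmcuda's API nor the Yinyang variant, which as far as I understand speeds up the same iteration by using distance bounds to skip comparisons. The 2-D points are made-up stand-ins for per-developer feature vectors.

```python
import random


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iterations=20, seed=0):
    """Plain Lloyd's k-means: returns (centroids, assignments)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster went empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments


# Hypothetical 2-D feature vectors forming two obvious groups.
points = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2), (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
centroids, assignments = kmeans(points, k=2)
```

At the scale of millions of users, the assignment step dominates the cost, which is the part a GPU implementation and bound-based pruning are meant to speed up.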

We are hiring!